Goals and Background

The main goal of this lab is to gain a basic understanding of the preprocessing and processing of radar imagery. The main objectives are as follows:

1) Reducing noise using a speckle filter

2) Spectral and spatial enhancement

3) Multi-sensor fusion

4) Texture analysis

5) Polarimetric processing

6) Slant range to ground range conversion

Methods

Because of the large number of tools and techniques used in this lab, the results from each tool are included in the section discussing that tool rather than in a separate results section, as I have done for most of my other posts. This makes it easier to compare the imagery before and after each tool or technique is applied.

Part 1: Speckle reduction and edge enhancement

Section 1: Speckle filtering

To reduce speckle we used the radar speckle suppression tool in ERDAS Imagine. We ran the tool three times, using the output of each pass as the input to the next. The parameters were changed slightly on each pass, as follows:

Pass 1:

- Coef. of Var. Multiplier = 0.5

- Output Option = Lee-Sigma

- Coef. of Variation = 0.275

- Window/filter size = 3 x 3 pixels

Pass 2:

- Coef. of Var. Multiplier = 1.0

- Output Option = Lee-Sigma

- Coef. of Variation = 0.195

- Window/filter size = 5 x 5 pixels

Pass 3:

- Coef. of Var. Multiplier = 2.0

- Output Option = Lee-Sigma

- Coef. of Variation = 0.103

- Window/filter size = 7 x 7 pixels

The speckle filter was run multiple times so that each pass further reduced the speckle, in turn improving the quality of the imagery and making it easier to interpret (Figures 1 and 2).

Figure 1: This is the original image with a high amount of speckle.

Figure 2: This is the despeckled image after running through the speckle processing three times.
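For readers without ERDAS, the idea behind the Lee-Sigma option can be sketched in a few lines of NumPy. This is only a minimal sketch and not ERDAS's implementation: the function name `lee_sigma`, the reflect padding at the image border, and the rule that a neighbor is accepted when it lies within multiplier x coefficient of variation x center value of the center pixel are all my assumptions. The loop reuses the three parameter sets from the lab, and the input is simulated speckle rather than the lab image.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def lee_sigma(image, window=3, coef_var=0.275, multiplier=0.5):
    """Average only the neighbors that fall inside the sigma range of each pixel."""
    img = np.asarray(image, dtype=np.float64)
    pad = window // 2
    padded = np.pad(img, pad, mode="reflect")  # border handling is an assumption
    # One (window x window) neighborhood per pixel: shape (rows, cols, window, window)
    windows = sliding_window_view(padded, (window, window))
    center = img[..., None, None]
    # Assumed acceptance range: center +/- multiplier * coef_var * |center|
    half_width = multiplier * coef_var * np.abs(center)
    in_range = np.abs(windows - center) <= half_width
    count = in_range.sum(axis=(-2, -1))  # always >= 1 (the center pixel itself)
    total = np.where(in_range, windows, 0.0).sum(axis=(-2, -1))
    return total / count


# Simulated multiplicative speckle on a flat scene (not the lab data)
rng = np.random.default_rng(0)
speckled = 100.0 * rng.gamma(shape=4.0, scale=0.25, size=(64, 64))

# The three passes from the lab: (window, coef. of variation, multiplier)
passes = [(3, 0.275, 0.5), (5, 0.195, 1.0), (7, 0.103, 2.0)]
despeckled = speckled
for window, coef_var, multiplier in passes:
    despeckled = lee_sigma(despeckled, window, coef_var, multiplier)
```

Each pass feeds its output into the next, which mirrors how the tool was chained in the lab.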

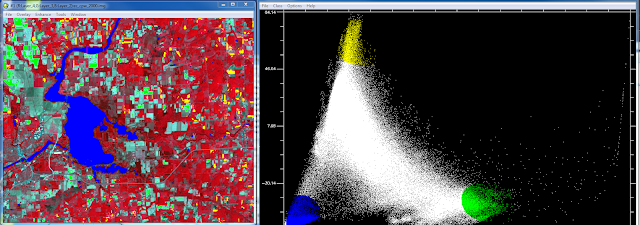

The easiest way to see the effect of the speckle filter is to look at the histogram of the radar image. The histogram of an image with a lot of speckle will have spikes all over and will most likely be roughly Gaussian, with only one hump. The more times the speckle tool is run, the fewer spikes there should be on the histogram, and it will become multi-modal (multiple humps) as the filter better separates the pixel values in the imagery. This can be seen in Figure 3.
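One rough way to put a number on "spikes" is to count the local maxima in the histogram. The sketch below is my own illustration and not something ERDAS reports: the helper name `histogram_spikes` and the choice of 256 bins are assumptions, and a bin counts as a spike when it is strictly higher than both of its neighbors.

```python
import numpy as np


def histogram_spikes(image, bins=256):
    """Return the histogram counts and the number of interior local maxima."""
    counts, _ = np.histogram(np.asarray(image).ravel(), bins=bins)
    interior = counts[1:-1]
    # A spike is a bin strictly higher than the bins on either side of it
    is_peak = (interior > counts[:-2]) & (interior > counts[2:])
    return counts, int(is_peak.sum())
```

Running this on the image before and after each speckle pass would give a simple count to compare alongside the visual histogram.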

Section 2: Edge enhancement

Next I performed edge enhancement. This is done in ERDAS by selecting raster-> spatial-> non-directional edge. Figure 4 is the resulting image.

Figure 4: This is the image after the edge enhancement has been run.
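A non-directional edge filter responds to edges in any orientation by combining two orthogonal gradient filters. The sketch below uses a pair of Sobel kernels and combines them as a gradient magnitude. The function name `non_directional_edge`, the edge padding, and the magnitude rule are my assumptions; ERDAS may combine the two responses differently.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

# Horizontal and vertical Sobel kernels (first-derivative edge detectors)
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def non_directional_edge(image):
    """Gradient magnitude from two orthogonal Sobel responses."""
    img = np.asarray(image, dtype=np.float64)
    padded = np.pad(img, 1, mode="edge")  # border handling is an assumption
    windows = sliding_window_view(padded, (3, 3))
    gx = np.einsum("ijkl,kl->ij", windows, SOBEL_X)
    gy = np.einsum("ijkl,kl->ij", windows, SOBEL_Y)
    return np.hypot(gx, gy)
```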

Section 3: Image enhancement

In this section of the lab we made use of several image enhancement techniques. The first is the Wallis adaptive filter. This is done by again running the radar speckle suppression tool, but with the filter changed to gamma-map. I then took the resulting image and went to raster-> spatial-> adaptive filter. In the parameter window, unsigned 8-bit is selected and the window size is set to 3 by 3 with a multiplier of 3.0. Figure 5 is the original image next to the enhanced image.

Figure 5: The original image is on the left and the enhanced image using the adaptive filter is on the right.
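The general idea of a Wallis-style adaptive filter is to stretch contrast locally, so that flat, low-contrast areas get more gain than areas that already vary a lot. Below is a simplified sketch of that idea and not the ERDAS algorithm. I am assuming that the local standard deviation is pushed toward the global standard deviation and that the multiplier acts as an upper limit on the local gain; the name `wallis_adaptive` is hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def wallis_adaptive(image, window=3, multiplier=3.0):
    """Simplified local contrast stretch with a capped gain, output as 8-bit."""
    img = np.asarray(image, dtype=np.float64)
    pad = window // 2
    padded = np.pad(img, pad, mode="reflect")
    windows = sliding_window_view(padded, (window, window))
    local_mean = windows.mean(axis=(-2, -1))
    local_std = windows.std(axis=(-2, -1))
    target_std = img.std()  # assumed target: the global standard deviation
    # Gain is large where local contrast is low, but never above the multiplier
    gain = np.minimum(target_std / (local_std + 1e-6), multiplier)
    enhanced = local_mean + gain * (img - local_mean)
    return np.clip(np.rint(enhanced), 0, 255).astype(np.uint8)
```

The defaults match the 3 by 3 window and multiplier of 3.0 used in the lab, and the output is clipped to unsigned 8-bit.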

Part 2: Sensor Merge, texture analysis and brightness adjustment

Section 1: Apply Sensor Merge

Another enhancement technique is sensor merge. This tool allows the user to take two images of the same area, such as a radar image and a multispectral image, and combine them into one image. It helps extract information from an area when the imagery from a single sensor is not good enough because of atmospheric interference and the like. In this lab we combined a radar image with a Landsat TM image that has fairly dense cloud cover over portions of it. The tool is found under raster-> utilities-> sensor merge. This opens the parameter window, where the following parameters are set:

- Method= IHS

- Resampling technique= Nearest neighbor

- IHS substitution= Intensity

- R = 1, G = 2, B = 3

- Unsigned 8-bit box is checked

Figure 6 shows the two original input images of the study area and Figure 7 is the result of running the sensor merge tool.

Figure 6: The image on the left is the radar and the image on the right is the Landsat TM image.

Figure 7: This is the resulting image from running the sensor merge tool.
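The IHS method with intensity substitution can be sketched with a simple linear intensity model. This is a sketch under assumptions and not ERDAS's exact transform: I take intensity as the mean of the three bands, in which case swapping the intensity for the radar image is the same as adding the difference (radar minus intensity) to every band. I also assume the radar image is already co-registered and resampled to the TM grid, and I rescale it to the mean and standard deviation of the intensity first. The function name `ihs_intensity_substitution` is hypothetical.

```python
import numpy as np


def ihs_intensity_substitution(rgb, radar):
    """Replace the intensity of an RGB composite with a co-registered radar band."""
    rgb = np.asarray(rgb, dtype=np.float64)      # shape (rows, cols, 3)
    radar = np.asarray(radar, dtype=np.float64)  # shape (rows, cols)
    intensity = rgb.mean(axis=-1)                # assumed model: I = (R + G + B) / 3
    # Rescale the radar band to the mean and spread of the original intensity
    standardized = (radar - radar.mean()) / (radar.std() + 1e-12)
    radar_matched = standardized * intensity.std() + intensity.mean()
    # Under the linear model, substituting I is an additive shift of each band
    fused = rgb + (radar_matched - intensity)[..., None]
    return np.clip(np.rint(fused), 0, 255).astype(np.uint8)
```

The output is clipped to unsigned 8-bit, as in the lab settings. Hue and saturation come from the multispectral image while the spatial detail comes from the radar.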

Section 2: Apply Texture Analysis

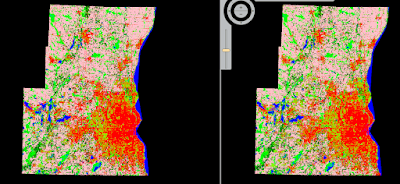

Another image enhancement tool we explored was the texture analysis tool. This tool is run by going to raster-> utilities-> texture analysis. Once the parameter dialogue box is open, the operator should be set to skewness and the window or filter size to 5 x 5. Figure 8 shows the original input image compared to the output image after the texture tool is run.

Figure 8: The original image is on the left and the texture analysis image is on the right.
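A skewness texture image measures how lopsided the distribution of pixel values is inside a moving window. The sketch below computes the standardized third moment in a 5 x 5 window. ERDAS's skewness operator may be normalized differently, and the function name `skewness_texture` and the reflect padding are my assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def skewness_texture(image, window=5):
    """Local skewness (third standardized moment) in a moving window."""
    img = np.asarray(image, dtype=np.float64)
    pad = window // 2
    padded = np.pad(img, pad, mode="reflect")
    windows = sliding_window_view(padded, (window, window))
    deviation = windows - windows.mean(axis=(-2, -1), keepdims=True)
    m2 = (deviation ** 2).mean(axis=(-2, -1))  # local variance
    m3 = (deviation ** 3).mean(axis=(-2, -1))  # local third central moment
    with np.errstate(divide="ignore", invalid="ignore"):
        # Perfectly flat windows have no defined skewness; report 0 there
        return np.where(m2 > 0, m3 / m2 ** 1.5, 0.0)
```

Windows with a few bright pixels on a dark background come out positive, and windows with a few dark pixels on a bright background come out negative.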

Section 3: Brightness Adjustment

The brightness adjustment tool is set up in a similar manner to the texture analysis tool. Using the same input image as for the texture analysis, open raster-> utilities-> brightness adjustment and the parameter dialogue box will open. The data type is set to float single and the output option to column. Figure 9 compares the image before and after the brightness adjustment tool is run.

Figure 9: The image on the left is the original image and the image on the right is the new image after brightness adjustment is run.
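My understanding of the column option is that the tool evens out brightness differences from one column to the next, such as the gradual brightening or darkening across the range direction of a radar image. A minimal sketch of that idea, assuming each column is simply rescaled so its mean matches the mean of the whole image (the function name `adjust_brightness_by_column` is hypothetical and this is not ERDAS's code):

```python
import numpy as np


def adjust_brightness_by_column(image):
    """Rescale every column so its mean equals the overall image mean."""
    img = np.asarray(image, dtype=np.float64)
    column_mean = img.mean(axis=0)
    overall_mean = img.mean()
    # Leave columns with a zero or negative mean unscaled to avoid dividing by zero
    scale = np.divide(overall_mean, column_mean,
                      out=np.ones_like(column_mean), where=column_mean > 0)
    return (img * scale).astype(np.float32)  # "float single" output
```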

Part 3: Polarimetric SAR Processing and Analysis

Section 1: Synthesize Images

The final portion of this lab was done in ENVI, another remote sensing software package. None of the imagery used in this portion of the lab is raw radar data; it was preprocessed and made ready to use by our professor beforehand. The radar imagery covers portions of Death Valley.

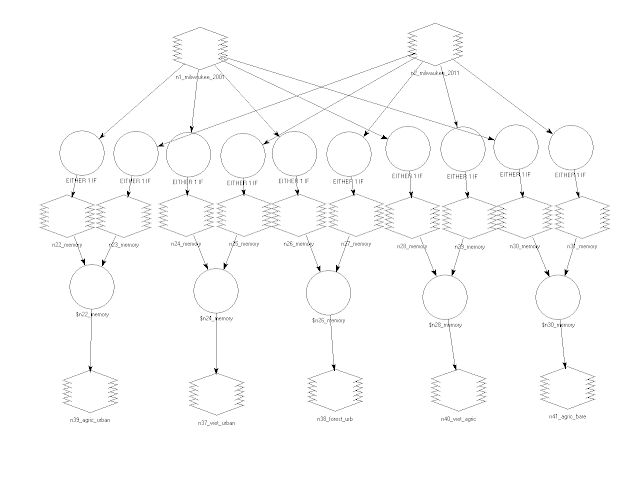

The data we used were collected by the SIR-C radar system and were given to us in a compressed format. Before we could use the imagery, it had to be mathematically synthesized. This is done by going to radar-> polarimetric tools-> synthesize SIR-C data, which opens a dialogue window. In this window we added 4 polarization combinations using the add combination button, changed the output data type to byte and hit OK. The next step was to view the image and test how different histogram stretching methods affect the appearance of the image. This is done by going to enhance-> interactive stretch on the image viewer window. The 3 types of stretch explored were Gaussian, linear and square-root. Figures 10-12 are the images with each of these histogram stretches applied.
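To make the difference between the three stretches concrete, here is a small NumPy sketch of each one mapping an image to 8-bit display values. These are my own simplified versions and not ENVI's code. The 2% and 98% clip points, the Gaussian target with a mean of 127 and a standard deviation of 42, and all three function names are assumptions.

```python
import numpy as np


def linear_stretch(image, low_pct=2.0, high_pct=98.0):
    """Map the chosen percentile range linearly onto 0-255."""
    img = np.asarray(image, dtype=np.float64)
    lo, hi = np.percentile(img, [low_pct, high_pct])
    scaled = np.clip((img - lo) / max(hi - lo, 1e-12), 0.0, 1.0)
    return np.rint(scaled * 255).astype(np.uint8)


def sqrt_stretch(image):
    """Scale to 0-1, take the square root (brightens dark values), map to 0-255."""
    img = np.asarray(image, dtype=np.float64)
    lo, hi = img.min(), img.max()
    scaled = (img - lo) / max(hi - lo, 1e-12)
    return np.rint(np.sqrt(scaled) * 255).astype(np.uint8)


def gaussian_stretch(image, mean=127.0, std=42.0):
    """Remap values so the output histogram approximates a Gaussian."""
    img = np.asarray(image, dtype=np.float64)
    levels = np.arange(256)
    pdf = np.exp(-0.5 * ((levels - mean) / std) ** 2)
    target_cdf = np.cumsum(pdf) / pdf.sum()
    # Empirical CDF of the input, evaluated at each pixel's value
    _, inverse, counts = np.unique(img.ravel(), return_inverse=True,
                                   return_counts=True)
    source_cdf = np.cumsum(counts) / img.size
    # Find the output level whose Gaussian CDF matches each pixel's CDF value
    matched = np.interp(source_cdf[inverse], target_cdf, levels)
    return np.rint(matched).reshape(img.shape).astype(np.uint8)
```

The linear stretch preserves the relative spacing of values, the square-root stretch pulls detail out of the dark end, and the Gaussian stretch reshapes the histogram itself.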

Figure 10: This is the Death Valley image with a Gaussian stretch applied to the histogram.

Figure 11: This is the image with a linear stretch applied.

Figure 12: This is the same image with a square-root stretch applied.

Part 4: Slant-to-Ground Range Transformation

The final piece of this lab looks at how to transform slant range to ground range. This transformation is necessary because radar imagery is collected at an angle, not in a nadir position like most aerial imagery. Converting from slant range to ground range greatly reduces skew, stretching and other distortion in the imagery.

This tool is accessed by going to radar-> slant to ground range-> SIR-C. The input image is selected, which in this case is the same image of Death Valley used for the synthesis above. Once it is selected the parameter dialogue box opens; the output pixel size should be set to 13.32 and the resampling method to bilinear. Figure 15 is the slant to ground range image compared to the original.

Figure 15: The image on the left is the original image and the image on the right is the slant to ground range corrected image.
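The geometry behind the conversion is a right triangle: on flat terrain, ground range is the square root of slant range squared minus sensor altitude squared. The sketch below resamples each image row onto evenly spaced ground-range samples. It is an illustration under assumptions and not ENVI's routine: it assumes a flat earth, range running along the columns, and linear interpolation along range standing in for bilinear resampling. The function name and the parameters `near_slant_range`, `slant_spacing` and `altitude` are hypothetical inputs that would come from the sensor metadata, all in the same units as the output pixel size.

```python
import numpy as np


def slant_to_ground_range(image, near_slant_range, slant_spacing, altitude,
                          ground_spacing=13.32):
    """Resample slant-range columns onto a uniform ground-range grid."""
    img = np.asarray(image, dtype=np.float64)  # rows = azimuth, cols = range
    if near_slant_range <= altitude:
        raise ValueError("near slant range must exceed the sensor altitude")
    n_cols = img.shape[1]
    slant = near_slant_range + slant_spacing * np.arange(n_cols)
    ground = np.sqrt(slant ** 2 - altitude ** 2)  # flat-earth assumption
    # Evenly spaced output positions across the imaged ground swath
    ground_out = np.arange(ground[0], ground[-1], ground_spacing)
    # Slant range, then fractional input column, for each output sample
    slant_out = np.sqrt(ground_out ** 2 + altitude ** 2)
    col_pos = (slant_out - near_slant_range) / slant_spacing
    left = np.clip(np.floor(col_pos).astype(int), 0, n_cols - 2)
    frac = col_pos - left
    # Linear interpolation between the two nearest input columns
    return img[:, left] * (1.0 - frac) + img[:, left + 1] * frac
```

Because equal steps in slant range cover more ground in the near range than in the far range, the near-range side of the image is stretched the most by this resampling.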

Sources

All image processing was done using ERDAS Imagine, 2015 and ENVI, 2015.